Talking Shop with:

Willem Sundblad — CEO and Co-Founder, Oden Technologies, Inc.

TB – Before you give me your view on analytics, let’s talk about Oden Technologies. I have a hunch. If I put an “i” in there instead of an “e” it would be Odin, the Norse mythology king with a single eye. He traded one of his eyes so that the one remaining could see everything and be tapped into all data. Is that how the company got its name?

Willem – Exactly. I’m from Sweden, where you can keep the “e”, and that is the Swedish name for Odin (Oden).

TB – Ok, got it. Let’s dive in. Data is power, knowing data is power, being able to see everything is powerful. Tell me about today’s analytics and the data we are dealing with, and the ability to see it all.

#BIGDATA

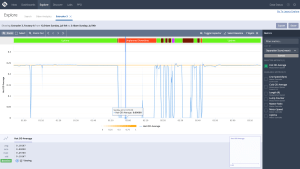

Willem – For a lot of manufacturers, the lines and systems generate so much information, that it’s almost impossible for people to make sense of it. So people end up being very reactive. I think that’s ultimately where we can use computers, essentially, to help people be a lot more proactive, because computers can crunch data much faster at a much greater volume than people ever can. A typical extrusion line will generate 5 million events every single day. And you just can’t have a person just look at all of that, because they just couldn’t be effective.

TB – And every event probably has more than one piece of information associated with it?

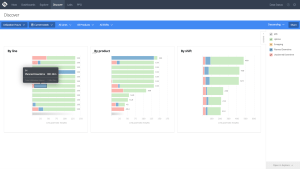

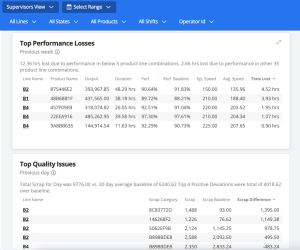

Willem – Yes, 100%. Looking at the journey to smarter factories, to better performing factories on a couple of different levels, we still have a lot of manufacturers that are earlier on in the digital maturity curve. The starting point, which we call guided analytics, is where we kind of curate, and help them prioritize what they need to do. So that might be things like prioritizing the biggest opportunities for performance improvements, for utilization improvements, for quality improvements, and generating data-driven targets, just like the 75th percentile example that you brought up earlier [ED NOTE: during the background sharing part of the interview, I referenced the top quartile approach from some of Oden’s biggest client successes]. Because there is a lot of variance in operator behavior, let’s just raise the bar a little bit. And we’ve seen that that is a very good entry point, because it removes variability, drives value, and it’s easy to understand and consume.

The next couple of layers add more intelligence into the recommendation. So one example is, for a lot of customers, we see that there’s often an inherent lag in the production process where you get quality results several hours delayed from the process itself. Either that could mean that you just get bad quality a couple of hours later, and you’ve wasted a lot of production, or what it more often means is that people run things very conservatively, in order to not waste production. If you can predict that offline quality test in real time, so that you know, in real time, that you’re making good products, it reduces the risk of improving the process in real time. We actually use that type of modeling to then prescribe the right set points for the customer to reach whatever outcome they want to achieve. If they want to lower the cost, lower the material consumption and lower energy consumption, increase the speed, then we actually give them the input parameters that they need to use in order to get a more efficient output.

And then the last step, which is more exploratory and which we’re working on now, is also generating work instructions for the operators, kind of like an AI support system for the operator. Because still, and we recognize this, the big bottleneck for a lot of manufacturers is talent. Talent is very scarce, it’s very hard to hire a lot of people that can perform these processes, especially when they say that it’s more of an art than a science. We can lower the barrier to entry for operators to become top performers, through recommendations, predictions and generative AI for how to achieve high performance. By enabling operators to leverage science more than art or intuition, we can really change the game in terms of how we make things.

#AI #Analytics #WorkforceEnablement

TB – I have a contention that analytics has always been some type of AI and it’s just now getting the stage; well the world of true analytics with algorithms and node based decisions and things like that, it’s always been there. And it seems now to be just getting packaged a little differently. But if I’m hearing you correctly, regarding AI and analytics, you’re taking the view that they are complementary, that they coexist, and there’s a role or a way that we make them work to achieve a better outcome.

Willem – It’s all math. And, you know, when you think about the kind of simpler analytics that might be the right starting point for a lot of people, that is generating what’s the right target for this product on this line at the 75th percentile of operator behavior? This is statistics, right? That’s not AI, you’re right. But then when you move into a little more advanced set of capabilities, then you end up in the world of machine learning, which is a subset of AI. So you can call that AI for sure. And that also comes in different flavors, depending on your use case. So predicting quality, as an example, might be a classical supervised machine learning problem, where we show the models the examples and the pattern recognition that they have to do: when you see this pattern, yield this result, in a linear regression for instance. You could also use deep learning, which is another subset of AI and machine learning, to do things that don’t require supervised learning. So more like your clustering, or this thing looks like that thing, or these things look the same. That’s another really useful tool to understand the data in a better way. [ED NOTE: clustering and “these things look the same” grouping are usually described as unsupervised learning; deep learning can be used in both supervised and unsupervised settings.]
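[ED NOTE: the “simpler analytics” Willem describes, a target set at the 75th percentile of past runs for each product on each line, can be sketched in a few lines of Python. This is only an illustration: the product names, line names, and speeds are made up, and the choice of line speed as the metric is an assumption, not a description of Oden’s implementation.]

```python
from collections import defaultdict
from statistics import quantiles

# Hypothetical run history: (product, line, line_speed_m_per_min).
runs = [
    ("cable-A", "line-1", 100.0),
    ("cable-A", "line-1", 110.0),
    ("cable-A", "line-1", 120.0),
    ("cable-A", "line-1", 130.0),
    ("cable-A", "line-1", 140.0),
    ("cable-B", "line-2", 50.0),
    ("cable-B", "line-2", 60.0),
    ("cable-B", "line-2", 70.0),
]


def percentile_targets(rows, pct=75):
    """Return {(product, line): value at the given percentile of past runs}."""
    grouped = defaultdict(list)
    for product, line, speed in rows:
        grouped[(product, line)].append(speed)

    targets = {}
    for key, speeds in grouped.items():
        # quantiles(n=100) returns the 99 cut points between percentiles,
        # so index pct - 1 holds the pct-th percentile.
        cuts = quantiles(speeds, n=100, method="inclusive")
        targets[key] = cuts[pct - 1]
    return targets


targets = percentile_targets(runs)
for (product, line), target in targets.items():
    print(f"{product} on {line}: target speed {target}")
```

Grouping by product and line first matters because a good speed for one product can be a poor one for another; the percentile is only meaningful within a group.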

With all the talk of AI currently in the market, with ChatGPT and other GPT-type functionality for generative AI, that structure is more how we’re thinking about those kinds of work instructions. So really then it becomes: how do you give meaning to words so that you can predict what the best next word is to put in front? And so that could be used in the form of ChatGPT: let’s just generate a paragraph that the model thinks makes sense. But it can also be used in a search example. Let’s say that we are predicting that a certain type of failure mode is happening. We can then create a prompt to look through the knowledge database to determine and find the work instruction that exists today for this specific prompt. Then we consume that work instruction and generate the outputs in a friendlier way for the operator, or in a step by step view. Then maybe we understand that the work instruction has five steps. Now we see as an example that three of those steps in the work instructions could be 1) check the values for temperature 2) check the values for speed and 3) check the values for pressure. The operator doesn’t need to do those three steps because the system already knows all those values. Just feed it in instead. That is what I would call, in the current hype cycle of AI, the only thing that is similar to the ChatGPT model. The other things are more classic machine learning problems.
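[ED NOTE: the last part of that idea, filling in the steps the system can already answer, can be illustrated without any language model at all. The sketch below assumes a work instruction has already been retrieved and that each step carries a hypothetical `tag` naming the measurement it asks for. The step wording, tags, and values are invented for illustration; the retrieval and text generation Willem mentions are left out.]

```python
# Hypothetical work instruction, as it might be retrieved from a knowledge base.
steps = [
    {"text": "Check the value for temperature", "tag": "temperature"},
    {"text": "Check the value for speed", "tag": "speed"},
    {"text": "Check the value for pressure", "tag": "pressure"},
    {"text": "Inspect the die for build-up", "tag": None},
    {"text": "Adjust the puller if the product is out of round", "tag": None},
]

# Latest values the system already has from the line (made-up numbers).
live_values = {"temperature": 191.5, "speed": 102.0, "pressure": 19.8}


def prefill(steps, live_values):
    """Split steps into ones answered from live data and ones left to the operator."""
    answered, remaining = [], []
    for step in steps:
        tag = step["tag"]
        if tag in live_values:
            answered.append(f"{step['text']}: {live_values[tag]}")
        else:
            remaining.append(step["text"])
    return answered, remaining


answered, remaining = prefill(steps, live_values)
print("Already known:", answered)
print("Operator to do:", remaining)
```

With the five-step example from the interview, three steps are answered from live data and two are left for the operator.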

#AI #MachineLearning #BusinessIntelligence #Analytics

TB – That’s really good stuff. Can you help us understand more about machine learning? I guess it’s what you just said, learning that patterns exist that say “the” is going to be the next word logically that makes sense after this string of characters, right?

Willem – I can give you a simple example. Let’s say that you want to predict an offline quality test. You have a lot of variables from the production line, as you’re making the product. These might be speeds, temperatures, pressures, motor loads, energy, material consumption, all these types of measurements, and then the output is a numerical test for the strength of the product. As an example, if you take three to four months of data history, you can probably train a machine learning model. And in this case, I’m going to say a linear regression, which really just means a polynomial expression like y = mx + b that defines the relationship between the input parameters and the output they yield. And so if you take all that history of data, you can find an equation for the process that, with a reasonably high degree of accuracy, defines what that offline quality test is going to be.
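[ED NOTE: for readers who want to see what “find an equation” means in the simplest case, here is a minimal sketch of fitting y = mx + b by ordinary least squares with one input variable. The temperatures and strength values are made up and deliberately lie on a straight line; a real model would use many inputs and months of history, as Willem describes.]

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = m*x + b with a single input variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the covariance of x and y divided by the variance of x.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx
    b = mean_y - m * mean_x
    return m, b


# Hypothetical history: melt temperature (deg C) vs. lab-measured strength.
temps = [180.0, 185.0, 190.0, 195.0, 200.0]
strength = [40.0, 41.0, 42.0, 43.0, 44.0]  # made-up values, exactly linear

m, b = fit_line(temps, strength)
predicted = m * 192.0 + b  # estimated strength at 192 deg C
print(f"strength = {m:.3f} * temp + {b:.3f}; at 192 deg C: {predicted:.2f}")
```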

TB – I use some capabilities in Tableau [ED Note: Tableau is business intelligence software from Salesforce] where they’ve got some kind of ability to take a bunch of variables, determine if there’s a relationship, and the tool will generate a curve fit for the data. It gives you a polynomial equation. You can choose how many degrees you want, to continue to drive down the p-value, which I believe in statistics is a measure of how close the distribution is. And I guess that tells you how good the fit is. [ED Note: strictly speaking, a p-value is the probability of seeing a relationship at least this strong by chance if none really existed; how closely a curve fits the data is more commonly summarized by R-squared.]

Willem – Exactly. And so that’s a simple example of a supervised machine learning problem, where the answer is a linear model. There are many other types of algorithms that you could do, but those are really useful, because we found that they can have a good degree of accuracy. And we can show all the coefficients to the domain experts, so that they can do the smell test. Essentially you can say if speed goes up, we know we have a positive before the coefficient on it. We expect quality to go down in this regard, unless you also change these other variables. So you get an actual mathematical representation of the whole production process. And then you run that new equation against the real time streaming data, which spits out if you were testing right now, this is what that offline quality test value would be.
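[ED NOTE: the two ideas in that answer, showing coefficients to domain experts and running the equation against live readings, might look roughly like the sketch below. The coefficient values, input names, and the live reading are all hypothetical; they are not taken from any real model.]

```python
# Hypothetical coefficients from an already-fitted linear model.
INTERCEPT = 10.0
COEFFICIENTS = {
    "line_speed": -0.05,  # negative: more speed, lower predicted strength
    "melt_temp": 0.2,
    "pressure": 0.1,
}


def predict_quality(reading):
    """Apply the linear equation to one real-time reading (a dict of inputs)."""
    return INTERCEPT + sum(
        coef * reading[name] for name, coef in COEFFICIENTS.items()
    )


def coefficient_directions():
    """Summarize each input's direction so a domain expert can sanity-check it."""
    return {
        name: ("up" if coef > 0 else "down")
        for name, coef in COEFFICIENTS.items()
    }


live = {"line_speed": 100.0, "melt_temp": 190.0, "pressure": 20.0}
estimate = predict_quality(live)
directions = coefficient_directions()
print(f"Estimated offline test value right now: {estimate:.1f}")
print("When each input rises, predicted quality goes:", directions)
```

Because the model is a plain weighted sum, every prediction can be traced back to individual inputs, which is what makes the “smell test” possible.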

TB – And what you should expect in terms of deviation from the baseline. Correct?

Willem – Yes, so typically we see errors of between, you know, 1% and 5%. And I think you have to get a very high degree of accuracy, which just means that you need more data, in order to not do the offline test. There might still be regulatory requirements or customer demand for it though. But even going from 0% visibility to 95% provides more accurate visibility in real time, which is a game changer.

TB – Absolutely. Tell me a little bit about how you would compare and contrast business intelligence or existing business analytics tools with Oden Technologies cloud solutions.

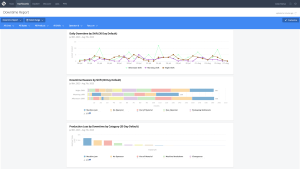

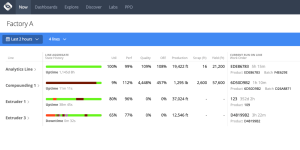

Willem – Our performance is real-time. You don’t have to “warm up” the queue, or make sure that all that data gets refreshed. Other tools require refreshing every 15 minutes or so. Most operator decisions are sooner than every 15 minutes. Or you refresh your BI (Business Intelligence) data every day so that it’s eventually consistent. BI is really good for taking historical relational data to do all types of reporting and slicing, dicing, and delayed analysis. Part of the problem with manufacturing data is that it’s a fairly unique combination of an incredible amount of time series data and an almost arbitrary amount of joins to that data. If you just look at the production process as a whole, you have those 5 million events that happened per line during the day. But you also want to know what operator was on the line, what machine was running, from what machine maker, what material was being used from what supplier. What state was the line in? Every single one of these joins creates complexity in the query.

One of the things that we’ve done is that we’ve built a whole architecture that is really built for the messy nature of manufacturing data, because it’s sometimes late, it’s sometimes missing, sometimes out of order. And even if it’s not any of those things, you still end up with this very complex picture of many joins with a lot of data. And so you need a way to query that dynamically, very quickly. And that’s what we’ve done with both our data model and OQL, which is the Oden Query Language. This is where we’ve actually abstracted away all that data engineering that would have to happen in the background for a BI tool. It is abstracted away and we’ve built in manufacturing terms in it. So someone who knows manufacturing can write queries, not necessarily programming, in seconds instead of hours.
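[ED NOTE: to make the “out of order” and “many joins” problems concrete, here is a small sketch that sorts late-arriving sensor events and tags each one with whichever operator was on shift at that moment. It is a generic illustration only: it is not OQL and says nothing about Oden’s actual data model, and the timestamps, values, and operator names are made up.]

```python
from bisect import bisect_right

# Hypothetical sensor events as (timestamp_seconds, value), arriving out of order.
events = [(30, 191.0), (10, 189.5), (50, 192.2), (20, 190.1)]

# Hypothetical context changes as (effective_from_seconds, operator), sorted by time.
shifts = [(0, "operator-1"), (25, "operator-2")]


def attach_context(events, shifts):
    """Sort events by time and tag each with the operator on shift at that moment."""
    starts = [start for start, _ in shifts]
    joined = []
    for ts, value in sorted(events):
        # Index of the last shift that started at or before this event.
        idx = bisect_right(starts, ts) - 1
        operator = shifts[idx][1] if idx >= 0 else None
        joined.append((ts, value, operator))
    return joined


joined = attach_context(events, shifts)
for row in joined:
    print(row)
```

Each additional kind of context (machine, material, supplier, line state) would need its own lookup like this one, which is the query complexity Willem is describing.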

We then think that the right way to do this is actually to tie our platform into the BI system, because then you get access to accurate, useful manufacturing data for the BI tool. So a big corporation will have a BI tool where they also have finance, sales data, HR data, all these things, but manufacturing is a mess to get in there. But if we are an abstraction layer to that, they can use all that manufacturing data in a very intuitive and useful way across more areas of the business.

#GeneratingBenefits #IIoT #RampingToValue

TB – So let’s talk about how quickly we could get somebody up to speed on the Oden Technologies solution. What would that look like where you were converting them to an analytic environment, where your dashboards were on TV screens, your jumbotron dashboards were around the location? You don’t have to talk about executive sponsorship, because executive sponsorship has to occur for anything to be successful like this, because there’s going to be a change management factor.

Willem – So let me first start with where you can get to, and then how we get there. So just an example from a customer that we’ve had for about three years now. They are continuously rapidly expanding. In March of this year, they deployed 200 production lines – in one month.

TB – That’s impressive. How many lines and how many distinct locations?

Willem – About 3-5 locations, with over 200 lines. That’s not what people do as a start. Typically, in the first engagement before we put boots on the ground, assuming executive sponsorship and the right business case, we look heavily at the business case and help the customer do a type of variance analysis to focus the effort. It is important that the beginning goes well, from a value delivery and from a usage standpoint, and we want to make sure we are solving the biggest problem. Whether that’s focusing on performance, utilization, or quality, we’re going to know where to look first. That helps us also determine who to work with, what systems we need to tie into, and what the starting point is. We do an infrastructure assessment before the customer comes on board as well, because the time series data from all these machines is going to come to us over MQTT.

That means that there’s got to be something on the line that sends the data to us in that format. And it could be Ignition, a fairly low cost SCADA system from the company Inductive Automation (www.inductiveautomation.com), or something like that, or an OPC UA server, but some form of Operational Technology network (OT network) at the facility level that sends us that data. Then there’s all the context data that might come from an MES system or ERP system, like “what product am I making?”, what the targets are, and which operators are on the line. Those usually come via another integration, an API integration.

Typically, if this is all orchestrated well, so that before we sign a contract we know what the infrastructure looks like and we know what the business case is, it should take 30-45 days before we can start first training. That is people using the tool. Typically, in our contracts we have a 90 day, pull the plug guarantee. The client can pull the plug and, no questions asked, get their money back. No one’s ever used that, because we really try to make sure that in the 30 to 45 days we have good expectations of who’s going to do what. If a third party integrator needs to come in, that probably means that our 30 to 45 day timer begins after that integrator has done that work. Then day 45 is when we have the first user training, and by day 90 the client may not have achieved all the success yet, but the client should have proven that this is going to get them to that goal that we set with the executive.

TB – There was an example on your website where a company had a 2x ROI. Did I read that right? They paid for it twice?

Willem – They paid for it twice – in six months. That number has grown significantly since then.

TB – Now I’m going to ask you to put on your CEO hat and talk in terms of strategy. What do you see on the horizon as the things that will have the biggest impact on your offering set or your target capabilities? Can you talk about the roadmap without giving away secrets? What’s big on the horizon?

Willem – So a couple of big things. The kind of machine learning recommendations, the Process AI capabilities that we’re calling it, those seem to be going really well in the market, so we’re going to continue to double down on those. We are continuing to invest in the kind of generative AI capabilities for that AI assistant for the operator because I really see that as a transformational capability for the industry right now.

As a company we have been growing really nicely over the last couple of years, but we’re still pretty small. So a big thing that we’re going to be doing is expanding our go to market team so that we can reach more people faster. And we are also starting to build up our own ecosystem. So integrations where one plus one equals three. And specifically, what I mean by that is things like BI tools and data lakes creating more native and good collaboration and integration with us. It all makes their systems stronger, because they get better, more accurate data, and we can show the market where we fit into the ecosystem in a better way. And also, we open up channels to expand more aggressively without hiring everyone in house.

#CloudComputing

TB – I know your backbone is largely Google Cloud services, so I’m wondering why you didn’t mention Bard in your AI discussion.

Willem – So we’re doing stuff with PaLM 2, which is the language model behind Bard. [Ed Note: Google Bard, AI from Google, is built on the Pathways Language Model 2 (PaLM 2), a language model announced in May 2023].

TB – Ok so at a much lower level than my interactions with Bard.

Willem – Most people have tried and seen Bard and ChatGPT. It’s interesting and not totally accurate. You can laugh about the responses. But you have to be accurate with what you give an operator. It requires a completely different execution model with AI. For instance, I asked ChatGPT about myself. It said that our company co-founder was my grandchild and that I passed away in 2018.

TB – What does this mean for a machine maker?

Willem – Machine makers are looking at this too. They’re thinking, “well, what is my role? What should I do? Should I be building out? Should we be partnering? What’s my world in the digital and AI future?” And what I’ve seen is that it really comes down to the fragmentation of the machine landscape. In your example during our background sharing, of the jet engines and the planes you have an example of an industry where the machine is kind of isolated so that it can produce the unit of work on its own, like a jet engine or even a Schindler elevator. Those providers build a tremendous amount of intelligence and capabilities into that. And they become machine-as-a-service.

TB – Oh, yeah. Well, they did the sensors as a service, right? I mean, that data coming off that jet engine, that has extra value.

Willem – But for most industries, like if you look at an extrusion line, you have granulators, blenders, dryers, extruders, measurement systems, pickups, and those are all machine makers.

TB – So then you touch on interoperability right? These different machines come to a layer of communication that makes one plus one equal three right?

Willem – Exactly. So for the machine makers on that type of landscape, the biggest thing that they can do, in my opinion, is focus on the interoperability and be the easiest thing to integrate. And also make sure that your machine is intelligent in its own capabilities, so that it doesn’t break and it adds the value of analytics on its own step.

TB – Okay, that is a wrap. Thanks for talking with us about Oden Technologies, AI, analytics and even IIoT.

Willem – You’re welcome!